Rust is a new-ish programming language that has the potential to displace other programming languages in the data engineering space. This is me getting on the ball and rolling into the brick wall that is Rust programming. The brick wall is just part of the discovery process.

The Test Case Function — Cryptocurrency Correlation

I wanted to see if I could build some Python extensions in Rust to speed up a Lambda function running on AWS. The Lambda function has an endpoint exposed via API Gateway. However, API Gateway has a hard, non-adjustable timeout of 30 seconds. This constraint makes it a perfect test of when building a Rust application makes sense. I am using the Go CDK to deploy these resources, and maybe I will write another article on that.

The function collects USD prices for multiple cryptocurrencies over the last day, at 5-minute intervals, from Yahoo Finance. A correlation matrix is then computed between pairs of tickers using close prices, i.e., the price at the end of each interval. The API returns this correlation matrix in JSON format.
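To make the computation concrete, here is a minimal pure-Python sketch of a pairwise Pearson correlation matrix serialized to JSON. It is not the code from my app.py (which uses numpy); the helper names `pearson` and `correlation_matrix` and the close prices are made up for illustration.

```python
import json
from itertools import combinations
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation of two equal-length price sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return None  # undefined for a constant series; serializes to JSON null
    return cov / sqrt(var_x * var_y)


def correlation_matrix(closes):
    """Build a symmetric {ticker: {ticker: r}} mapping from close prices."""
    tickers = sorted(closes)
    matrix = {t: {t: 1.0} for t in tickers}  # diagonal set to 1.0 by convention
    for a, b in combinations(tickers, 2):
        r = pearson(closes[a], closes[b])
        matrix[a][b] = r
        matrix[b][a] = r
    return matrix


# Made-up close prices, one value per interval (illustration only).
closes = {
    "BTC-USD": [100.0, 101.0, 103.0, 102.0],
    "ETH-USD": [10.0, 10.2, 10.6, 10.4],
    "XRP-USD": [0.52, 0.51, 0.49, 0.50],
}
payload = json.dumps(correlation_matrix(closes))
print(payload)
```

Each pair is computed once and mirrored, so the amount of work grows with the number of ticker pairs rather than the number of tickers.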

Here is a list of popular cryptocurrencies that I used in the tests:

    [
    "BTC-USD", "ETH-USD", "USDT-USD", "BNB-USD", "XRP-USD", "USDC-USD",
    "SOL-USD", "ADA-USD", "DOGE-USD", "TRX-USD", "TON-USD", "LINK-USD",
    "AVAX-USD", "MATIC-USD", "DOT-USD", "WBTC-USD", "DAI-USD", "LTC-USD",
    "SHIB-USD", "BCH-USD", "LEO-USD", "UNI-USD", "OKB-USD", "ATOM-USD",
    "XLM-USD", "TUSD-USD", "XMR-USD", "KAS-USD", "ETC-USD", "CRO-USD",
    "LDO-USD", "FIL-USD", "HBAR-USD", "ICP-USD", "APT-USD", "RUNE-USD",
    "NEAR-USD", "BUSD-USD", "OP-USD", "MNT-USD", "VET-USD", "MKR-USD",
    "AAVE-USD", "FTT-USD", "ARB-USD", "INJ-USD", "RNDR-USD", "QNT-USD"
    ]

The Python Implementation

For the Python implementation, I am using a very naive approach, at least if my intention were to create a function that worked as quickly as possible. I’m using the yfinance Python library to collect one ticker at a time, sequentially. Currently, this library can only parallelize across cores, which won’t work in a Lambda. A better approach would have been to use the aiohttp library, but there are too many variations to do a comprehensive comparison. The yfinance library returns one Pandas DataFrame per request. The results could be merged into one large DataFrame. However, for this example, I merge two DataFrames at a time, then correlate the prices with numpy. Again, there are many other variations that might improve speed.
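To illustrate why sequential collection is the slow part, here is a small sketch comparing one-at-a-time requests with concurrent ones using only the standard library's asyncio. This is not my app.py and it does not call Yahoo Finance: `fetch_prices` is a hypothetical stand-in that sleeps for 50 ms to simulate network latency, and the returned prices are placeholders.

```python
import asyncio
import time


async def fetch_prices(ticker, delay=0.05):
    """Hypothetical stand-in for one HTTP request; sleeps instead of calling a real API."""
    await asyncio.sleep(delay)
    return ticker, [1.0, 2.0, 3.0]  # placeholder close prices


async def fetch_sequential(tickers):
    """Await each request before starting the next one."""
    results = {}
    for ticker in tickers:
        name, prices = await fetch_prices(ticker)
        results[name] = prices
    return results


async def fetch_concurrent(tickers):
    """Start all requests at once and wait for them together."""
    pairs = await asyncio.gather(*(fetch_prices(t) for t in tickers))
    return dict(pairs)


def timed(coro_fn, tickers):
    """Run a coroutine function to completion and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = asyncio.run(coro_fn(tickers))
    return result, time.perf_counter() - start


tickers = ["BTC-USD", "ETH-USD", "USDT-USD", "BNB-USD", "XRP-USD"]
seq_result, seq_seconds = timed(fetch_sequential, tickers)
con_result, con_seconds = timed(fetch_concurrent, tickers)
print(f"sequential: {seq_seconds:.2f}s, concurrent: {con_seconds:.2f}s")
```

With simulated latency, the sequential version takes roughly the sum of the delays while the concurrent version takes roughly one delay. Real requests would also be subject to rate limits and failures, which this sketch ignores.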

app.py

The Python+Rust Implementation

Two modules were created in Rust: 1) an asynchronous HTTP function that collects the raw text output from Yahoo Finance using the reqwest Rust library, and 2) a custom Rust Polars function that correlates two Python Polars Series. For some reason, the PyPolars correlation function uses numpy, which is strange. Python is used to collect the outputs from these functions and act as an interface for the REST API.

app.py

lib.rs

Note that I am excluding all the other code used by the lib file for brevity.

Speed Test Results

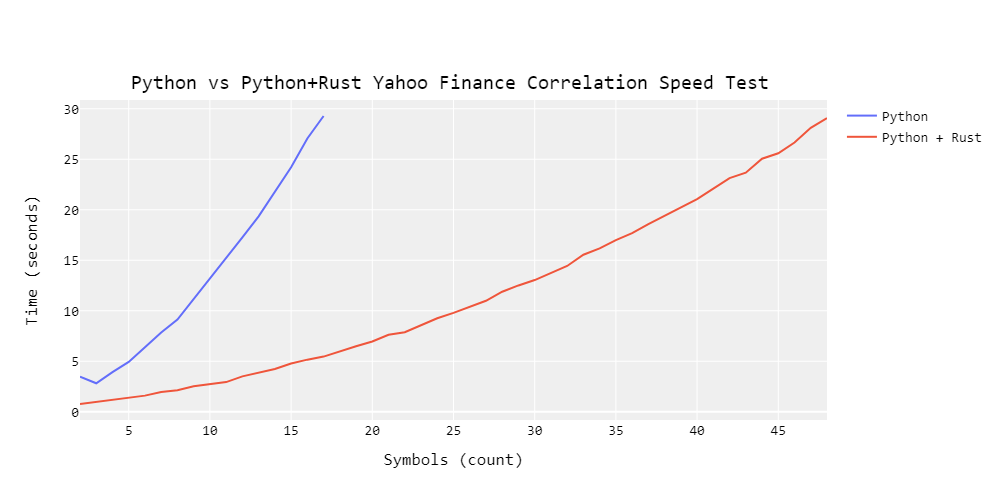

The plot shows the speed comparison between these two approaches, i.e., Python vs. Python+Rust. Obviously, the Python+Rust approach was much faster than the naive Python approach.

The Python+Rust approach was able to collect and process all 48 of the cryptocurrency symbols, although any more than 48 symbols would cause API Gateway to time out. The naive Python approach was able to process and return a little more than 15 symbols in around 30 seconds.
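For a rough sense of scale, the short sketch below counts how many ticker pairs each run has to correlate and derives an average time per symbol for the naive run. The constants are taken from the figures quoted in this article; rounding "a little more than 15" down to 15 is an assumption, so the per-symbol figure is only approximate.

```python
from math import comb

API_GATEWAY_TIMEOUT_S = 30  # timeout quoted in this article
NAIVE_SYMBOLS = 15          # "a little more than 15" symbols, rounded down (assumption)
ALL_SYMBOLS = 48            # size of the ticker list used in the tests


def pair_count(n_symbols):
    """Number of distinct ticker pairs in an n x n correlation matrix."""
    return comb(n_symbols, 2)


naive_pairs = pair_count(NAIVE_SYMBOLS)  # 105 pairs
all_pairs = pair_count(ALL_SYMBOLS)      # 1128 pairs
naive_seconds_per_symbol = API_GATEWAY_TIMEOUT_S / NAIVE_SYMBOLS  # about 2 s

print(f"pairs for {NAIVE_SYMBOLS} symbols: {naive_pairs}")
print(f"pairs for {ALL_SYMBOLS} symbols: {all_pairs}")
print(f"naive average per symbol: ~{naive_seconds_per_symbol:.1f}s")
```

The number of downloads grows linearly with the symbol count, but the number of correlations grows quadratically, so going from 15 to 48 symbols means more than ten times as many pairs.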

My Rust Learning Summary

Here are a few notes on what I learned while completing this project. I estimate that I put in around 40–60 hours, which is a lot considering that I did this in one week. This is probably because the Rust learning curve is steep and I had no previous Rust experience. Here is a summary of what I learned:

- The various functionality of Maturin/Cargo CLI.

- The syntax of PyO3, a tool used to create Rust bindings for Python.

- How to call a Rust async function and return the result back to Python.

- How to use the Rust Polars dataframe library to process and return data.

- How to compile and build Rust bindings in a Python AWS ECR container.

Originally, I had planned to build out the whole API in Rust, but I could easily have spent another 60+ hours to make that happen. Did I take a few shortcuts? Yes. I did what worked, and luckily I got some really nice speed improvements. Here are a few items that I would still need to figure out:

- How to work with Serde JSON output from the Reqwest library.

- How to pipe async JSON results into a Rust Polars dataframe.

- Compile the Rust Polars crate locally because not all functions are available in the public crate for some reason.

- Learn how to use Cargo’s test functionality.

- Build the whole API interface in an AWS ECR container.

- Build the whole API interface in an AWS ECR container.

- Build the whole API interface in an AWS ECR container.

^ I added the last item three times to show my frustration with how long it took me to make Rust bindings work in a CentOS container. The worst part was that I encountered an ERROR-NO-ERROR-MESSAGE situation, where I had to debug something that produced no error. I spent the most time trying to figure out why an extension would work locally on my Windows 11 machine but not in the CentOS Docker container. Luckily, I found a workaround, but I still do not understand why the extension did not work initially.

Cargo, Rust, and PyO3 in an ECR Python Docker Image

I’m sharing the Dockerfile that took me an embarrassingly long time to figure out. At the time of writing this article, there didn’t seem to be a working example for this task yet.

    # Use the official AWS Lambda Python 3.10 base image
    FROM public.ecr.aws/lambda/python:3.10

    # Update the package manager and install necessary packages (gcc is the build dependency)
    RUN yum update -y && \
        yum install -y zip unzip jq openssl-devel gcc

    # Install Rust and Cargo
    RUN curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh -s -- -y

    # Add the Rust binaries to the PATH; ENV persists across build steps, unlike export inside a RUN
    ENV PATH="/root/.cargo/bin:${PATH}"

    # Copy Rust and Python project files
    COPY Cargo.toml Cargo.toml
    COPY pyproject.toml pyproject.toml
    COPY src ./src/
    COPY requirements.txt .

    # Install Python dependencies
    RUN pip install --no-cache-dir -r requirements.txt

    # Build the Rust extension using Maturin and install the resulting wheel
    RUN maturin build --sdist --release && \
        pip install target/wheels/*.whl

    # Copy Python files
    COPY app.py ./

    # Set the default command to run the Lambda function
    CMD [ "app.lambda_handler" ]

There are no guarantees that this Dockerfile will work with future versions of Maturin. The main issue I encountered here was building and installing the extension in a way that made it available to the base Python used to run the Lambda function. You see, maturin develop requires a Python virtual environment, which would not be available to the Lambda app. The solution was to run maturin build in the Docker build phase, which produces a source distribution and a release wheel, and then pip-install the wheel into the base Python.
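Since the hard part was knowing whether the extension was actually visible to the base interpreter, a small import smoke test can help. The sketch below uses only the standard library; `check_extension` is a hypothetical helper, and `my_rust_ext` is a placeholder for whatever module name your Cargo.toml/pyproject.toml defines. It handles top-level module names only.

```python
import importlib
import importlib.util
import sys


def check_extension(module_name):
    """Return (ok, detail) for whether a top-level module imports under this interpreter."""
    if importlib.util.find_spec(module_name) is None:
        return False, f"{module_name!r} not found on sys.path of {sys.executable}"
    try:
        module = importlib.import_module(module_name)
    except Exception as exc:  # e.g. ImportError from a missing shared library
        return False, f"{module_name!r} found but failed to import: {exc!r}"
    location = getattr(module, "__file__", "built-in")
    return True, f"{module_name!r} loaded from {location}"


# "json" stands in for a module that exists; "my_rust_ext" is a placeholder name.
for name in ("json", "my_rust_ext"):
    ok, detail = check_extension(name)
    print("OK  " if ok else "FAIL", detail)
```

Running something like this with the same Python that Lambda uses, for example as a step at the end of the Docker build, separates "the wheel was never installed for this interpreter" from "the module is installed but fails while loading".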

For completeness, here is my requirements.txt file:

    pyarrow==12.0.1
    polars==0.19.15
    maturin[patchelf]==1.3.0
    cffi

Conclusion

There definitely seem to be valid use cases for using Rust bindings to speed up Python code. While the development time for Rust programs was significantly higher, I am sure that with more experience I will be able to iterate on solutions much faster. However, the compile time for testing code is still slow (it can take around 20–30 seconds for a Rust program to compile). There also aren’t very many examples in the documentation, and there is little StackOverflow support yet. This is another reason why taking on a Rust project might not be the best approach.

If you are interested in building Rust bindings for Python, here are some introductory resources: